Big data has become an integral part of the digital world. As the volume of data keeps growing, businesses are looking for ways to store and analyze it so they can make informed decisions. This is where Big Data Hadoop comes in. In this article, we explore what Hadoop is, how it works, and why it matters.

Big Data Hadoop (formally Apache Hadoop) is an open-source framework for storing and processing large data sets across clusters of computers. It is designed to handle massive amounts of data and scales horizontally: capacity grows by adding more machines to the cluster rather than by buying a bigger server. Large organizations such as Yahoo and Facebook have used Hadoop for data processing, analysis, and storage, and its design was inspired by Google's published papers on the Google File System and MapReduce.

Key features of Big Data Hadoop include:

- Distributed storage and processing

- Scalability

- Cost-effectiveness

- Fault tolerance

- Flexibility

- Ability to handle unstructured data
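To make the distributed storage and fault-tolerance features more concrete, the sketch below models how Hadoop's file system, HDFS, splits a file into fixed-size blocks and stores copies of each block on several nodes, so the file can still be read after a node fails. It is a simplified in-memory simulation, not HDFS code: the 4-byte block size, the node names, and the round-robin placement are assumptions for illustration (real HDFS defaults to 128 MB blocks and uses a rack-aware placement policy), while the replication factor of 3 matches the HDFS default.

```python
# Simplified in-memory model of HDFS-style storage (not real HDFS code).
# Assumptions: tiny block size, hypothetical node names, round-robin placement.

BLOCK_SIZE = 4      # bytes per block in this toy example; HDFS defaults to 128 MB
REPLICATION = 3     # HDFS's default replication factor


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Cut the file into consecutive fixed-size blocks (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks: list, nodes: list, replication: int = REPLICATION) -> dict:
    """Store each block on `replication` distinct nodes; returns {node: {block_index: bytes}}."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    storage = {node: {} for node in nodes}
    for idx, block in enumerate(blocks):
        # Shift the starting node per block so replicas are spread across the cluster.
        for r in range(replication):
            storage[nodes[(idx + r) % len(nodes)]][idx] = block
    return storage


def read_file(storage: dict, num_blocks: int, failed=frozenset()) -> bytes:
    """Reassemble the file, reading each block from any replica on a node that is still up."""
    parts = []
    for idx in range(num_blocks):
        replica = next(
            (node_blocks[idx] for node, node_blocks in storage.items()
             if node not in failed and idx in node_blocks),
            None,
        )
        if replica is None:
            raise OSError(f"block {idx} lost: every replica is on a failed node")
        parts.append(replica)
    return b"".join(parts)


nodes = ["node1", "node2", "node3", "node4"]
data = b"hello distributed world"
blocks = split_into_blocks(data)
storage = place_blocks(blocks, nodes)

# With one node down, every block still has live replicas, so the file is intact.
print(read_file(storage, len(blocks), failed={"node2"}) == data)  # True
```

Losing a single node leaves at least two replicas of every block; only when all the nodes holding some block fail does `read_file` raise an error. Trading extra disk space for availability in this way is what lets Hadoop run on commodity hardware that is expected to fail occasionally.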

Why is Big Data Hadoop important?

Big Data Hadoop addresses a key limitation of traditional data processing systems: their difficulty scaling beyond a single powerful machine. It can process large volumes of both structured and unstructured data, enabling organizations to make informed decisions based on that data. Hadoop is also cost-effective, because it runs on commodity hardware and reduces the need for expensive specialized servers.

Benefits of using Big Data Hadoop include:

- Supports large-scale batch processing and analysis (near-real-time processing is possible with ecosystem tools such as Apache Spark or Apache Storm)

- Scalable to handle large volumes of data

- Cost-effective

- Flexible and can handle various data types

- Enables better decision-making

How does Big Data Hadoop work?

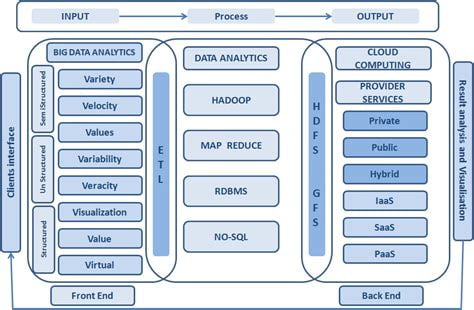

Big Data Hadoop uses a distributed file system called Hadoop Distributed File System (HDFS) to store data across multiple nodes in a cluster. The data is processed using a programming model called MapReduce, which allows for parallel processing of data across multiple nodes in a cluster. The MapReduce model consists of two functions: map and reduce.

MapReduce functions:

- Map: This function processes input records and emits intermediate key-value pairs.

- Reduce: After the framework shuffles and sorts the map output so that all values for the same key are grouped together, this function combines each key's values into a final result.
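The map and reduce steps can be illustrated with the classic word-count example. This sketch is written in the style of Hadoop Streaming, which lets mappers and reducers be ordinary programs that read lines from standard input and write tab-separated key-value lines to standard output. Between the two stages, Hadoop sorts the map output by key so that all pairs with the same key reach the same reducer together; when testing locally, the Unix `sort` command stands in for that step. The file name `wordcount.py` and the `map`/`reduce` command-line arguments are choices made for this example, not part of Hadoop.

```python
#!/usr/bin/env python3
"""wordcount.py: word count as a Hadoop Streaming-style mapper and reducer.

Run as `wordcount.py map` or `wordcount.py reduce`; both stages read lines
from stdin and write "key<TAB>value" lines to stdout.
"""
import sys
from itertools import groupby


def mapper(lines):
    """Map: emit a (word, 1) pair for every word in every input line."""
    for line in lines:
        for word in line.lower().split():
            yield f"{word}\t1"


def reducer(lines):
    """Reduce: input is sorted by key, so equal words are adjacent; sum their counts."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines if line.strip())
    for word, group in groupby(pairs, key=lambda pair: pair[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


if __name__ == "__main__":
    stages = {"map": mapper, "reduce": reducer}
    stage = stages.get(sys.argv[1]) if len(sys.argv) == 2 else None
    if stage is None:
        print("usage: wordcount.py map|reduce", file=sys.stderr)
    else:
        for out in stage(sys.stdin):
            print(out)
```

A local dry run might look like `cat input.txt | python3 wordcount.py map | sort | python3 wordcount.py reduce`. On a cluster, the same script would be supplied to the Hadoop Streaming JAR as the mapper and reducer; the exact JAR path and job options depend on the installation.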

FAQ

What is the difference between Hadoop and traditional relational databases?

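Hadoop is designed to handle unstructured and semi-structured data as well as structured data, while traditional relational databases are designed for structured data that fits a predefined schema. Hadoop also scales out more cheaply to very large data volumes, whereas relational databases are generally better suited to low-latency queries and transactional workloads.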
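One common way to describe this difference is schema-on-write versus schema-on-read: a relational database checks each row against a table schema when the data is loaded, whereas Hadoop usually stores raw files as they are and applies structure only when a job reads them. The sketch below shows the read-time side in plain Python; the sample records, field names, and parsing rules are hypothetical.

```python
import json
import re

# Raw, mixed-format records stored as-is (hypothetical sample data).
raw_records = [
    "2024-01-05 10:00:01 GET /index.html 200",
    '{"ts": "2024-01-05 10:00:02", "method": "POST", "path": "/login", "status": 302}',
    "corrupted line ###",
    "2024-01-05 10:00:03 GET /about.html 404",
]

# Expected layout of plain-text log lines: date time method path status
LOG_PATTERN = re.compile(r"^(\S+ \S+) (\w+) (\S+) (\d{3})$")


def parse(record):
    """Apply a schema at read time; return a dict, or None if the record doesn't fit."""
    if record.startswith("{"):
        try:
            obj = json.loads(record)
        except json.JSONDecodeError:
            return None
        if not isinstance(obj.get("status"), int):
            return None
        return {key: obj.get(key) for key in ("ts", "method", "path", "status")}
    match = LOG_PATTERN.match(record)
    if match is None:
        return None
    ts, method, path, status = match.groups()
    return {"ts": ts, "method": method, "path": path, "status": int(status)}


rows = [row for row in map(parse, raw_records) if row is not None]
errors = [row for row in rows if row["status"] >= 400]
print(len(rows), len(errors))  # 3 1
```

Records that fit neither format are skipped when the data is read rather than rejected when it is loaded. That is convenient for messy data, but it moves data-quality checks into the processing code.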

What kind of data can be processed using Hadoop?

Hadoop can handle both structured and unstructured data, including text, images, videos, and audio files.

Is Hadoop difficult to learn?

Learning Hadoop can be challenging, but there are many resources available online to help you get started, including tutorials, videos, and online courses.

What are the advantages of using Hadoop?

The advantages of using Hadoop include scalability, cost-effectiveness, fault tolerance, flexibility in the data types it handles, and support for better decision-making.

Is Hadoop used by large organizations?

Yes. Organizations such as Yahoo and Facebook have run large Hadoop clusters for data processing, analysis, and storage.

Can Hadoop be used for real-time data processing?

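Not with core MapReduce alone, which is a batch processing model. Near-real-time and stream processing is usually handled by related tools such as Apache Storm and Apache Spark, which are often deployed alongside Hadoop. Spark, for example, can run on a Hadoop cluster through YARN and read data stored in HDFS.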
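The difference between batch and stream processing can be sketched in plain Python. A batch job, like a classic MapReduce job, waits for the complete data set and then computes one result, while a streaming engine updates results as events arrive, here by emitting a count for each fixed five-second window as soon as that window closes. This is not the Apache Storm or Apache Spark API; the function names and sample events are hypothetical, and the sketch assumes events arrive in timestamp order, which real streaming systems cannot always rely on.

```python
from collections import Counter


def batch_count(events):
    """Batch style: wait for the complete data set, then count once."""
    return Counter(word for _, word in events)


def tumbling_window_counts(events, window_seconds):
    """Streaming style: yield (window_start, counts) as soon as each window closes.

    `events` is an iterable of (timestamp_seconds, word) pairs in time order.
    """
    current_window, counts = None, Counter()
    for ts, word in events:
        window = int(ts // window_seconds)
        if current_window is not None and window != current_window:
            # A newer event arrived, so the previous window is complete: emit it.
            yield current_window * window_seconds, dict(counts)
            counts = Counter()
        current_window = window
        counts[word] += 1
    if current_window is not None:
        # Flush the last window once the stream ends.
        yield current_window * window_seconds, dict(counts)


events = [(0.5, "click"), (1.2, "view"), (4.9, "click"), (5.1, "click"), (7.0, "view")]
print(batch_count(events))  # Counter({'click': 3, 'view': 2})
for start, counts in tumbling_window_counts(events, window_seconds=5):
    print(start, counts)
# 0 {'click': 2, 'view': 1}
# 5 {'click': 1, 'view': 1}
```

The streaming version can report the first window before the later events exist, which is the property that makes it suitable for near-real-time dashboards and alerts.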

Pros

Big Data Hadoop is highly scalable, cost-effective, and flexible, making it a popular choice for handling large volumes of data. It enables organizations to make informed decisions based on large-scale data processing and analysis, which can improve business outcomes.

Tips

If you’re new to Big Data Hadoop, it’s important to start with the basics and work your way up. There are many online resources available to help you learn, including tutorials, videos, and online courses. It’s also important to have a clear understanding of your organization’s data needs and how Hadoop can help meet those needs.

Summary

Big Data Hadoop is an open-source framework for storing and processing large data sets across clusters of computers. It is designed to handle massive amounts of data and enables organizations to make informed decisions based on large-scale data processing and analysis. Hadoop is highly scalable, cost-effective, and flexible, making it a popular choice for handling large volumes of data.

Eltupe Technology And Software Updates