Introduction

Welcome, fellow technology enthusiasts, to an exciting journey that explores the convergence of big data, cloud computing, and two powerful frameworks – Hadoop and Spark. In this article, we will delve into the world of data-driven insights and processing capabilities that have revolutionized industries and transformed how we extract value from voluminous datasets.

Pioneering journalist Carl Bernstein once said, “The lowest form of popular culture – lack of information, misinformation, disinformation, and a contempt for the truth or the reality of most people’s lives – has overrun real journalism.” Taking his warning about misinformation to heart, we embark upon our quest to understand the intricacies of big data and cloud computing with Hadoop and Spark accurately.

Imagine a universe where vast volumes of data are generated every second – from social media interactions to sensor readings in smart cities. This mountain of information holds immense potential to shape decisions, drive innovation, and enhance operations across diverse sectors. However, harnessing the power of this data requires sophisticated technologies that can process, store, and analyze it effectively. This is where big data and cloud computing come into play.

Hadoop: Taming the Data Deluge

Hadoop, an open-source framework, emerged as the modern-day hero of big data processing. With the Hadoop Distributed File System (HDFS) and the MapReduce programming model, Hadoop handles massive datasets across clusters of commodity hardware. By splitting data into blocks, breaking jobs into smaller tasks, and running those tasks in parallel on the machines that hold the data, Hadoop provides scalability, fault tolerance, and high-throughput batch processing.
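To make the split-then-combine idea concrete, here is a minimal single-process sketch of the three phases of a MapReduce word count (map, shuffle, reduce) in plain Python. It illustrates the programming model only and is not Hadoop’s Java API; the function names are hypothetical, and a real job would run the map and reduce tasks in parallel across the cluster, reading its input from HDFS.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(chunk: str) -> List[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input chunk."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group intermediate values by key, as the framework does between map and reduce."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: combine all values for a key; for word count, sum them."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks: List[str]) -> Dict[str, int]:
    # On a cluster, each chunk would be mapped by a separate task on a
    # separate machine; here the map tasks simply run one after another.
    intermediate = [pair for chunk in chunks for pair in map_phase(chunk)]
    return reduce_phase(shuffle_phase(intermediate))


if __name__ == "__main__":
    print(word_count(["big data big insights", "data in the cloud"]))
    # {'big': 2, 'data': 2, 'insights': 1, 'in': 1, 'the': 1, 'cloud': 1}
```

Because each map task only sees its own chunk and each reduce call only sees one key, the work can be spread over as many machines as there are chunks and keys.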

Spark: Igniting Real-Time Analytics

While Hadoop revolutionized big data processing, Apache Spark takes it a step further by adding lightning-fast analytics capabilities. Spark keeps intermediate data in memory rather than writing it to disk between processing steps, which speeds up iterative algorithms, near-real-time data streaming, and interactive queries on large-scale datasets. Its unified engine handles batch jobs, SQL, streaming, and machine learning in one framework, simplifying complex workflows and giving organizations timely insights that drive actionable outcomes.
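The benefit of keeping data in memory is easiest to see in an iterative job. The plain-Python sketch below is an analogy, not Spark code: `CountingSource` is a hypothetical stand-in for data on disk, and the loop re-reads it on every iteration unless the first read is kept in memory, which is roughly what calling `cache()` or `persist()` on a Spark dataset achieves.

```python
from typing import Callable, List, Tuple


class CountingSource:
    """Hypothetical stand-in for a dataset on disk that counts how often it is read."""

    def __init__(self, values: List[float]) -> None:
        self._values = list(values)
        self.reads = 0

    def load(self) -> List[float]:
        self.reads += 1  # each call models one full pass over storage
        return list(self._values)


def iterate_toward_mean(load: Callable[[], List[float]], iterations: int) -> float:
    """Toy iterative job: each step moves the estimate halfway toward the data's mean."""
    estimate = 0.0
    for _ in range(iterations):
        data = load()  # the same dataset is needed on every iteration
        mean = sum(data) / len(data)
        estimate += 0.5 * (mean - estimate)
    return estimate


def run(values: List[float], iterations: int, cache: bool) -> Tuple[float, int]:
    source = CountingSource(values)
    if cache:
        cached = source.load()  # read once and keep the result in memory
        loader = lambda: cached
    else:
        loader = source.load  # go back to storage on every iteration
    return iterate_toward_mean(loader, iterations), source.reads


if __name__ == "__main__":
    print(run([1.0, 2.0, 3.0], 10, cache=False))  # (1.998046875, 10)
    print(run([1.0, 2.0, 3.0], 10, cache=True))   # (1.998046875, 1)
```

Both runs produce the same answer; only the number of trips to storage differs, and that difference grows with the number of iterations.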

Unleashing the Power of Big Data and Cloud Computing

The synergy between big data, cloud computing, Hadoop, and Spark creates endless possibilities across various industries. Let’s explore some of the strengths and weaknesses of these technologies:

Strengths of Big Data and Cloud Computing with Hadoop and Spark

1. Scalability

Big data and cloud computing make it possible to scale horizontally: instead of upgrading a single server, organizations add machines and distribute data and processing across them. Hadoop and Spark let organizations handle ever-growing datasets this way while keeping performance under control.
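Horizontal scaling depends on spreading records evenly across machines. A common way to do that is hash partitioning, which Spark also uses by default for many key-based operations. The sketch below is a simplified, single-process illustration: it uses `zlib.crc32` as the hash (an assumption made for reproducibility; Spark’s own partitioner hashes keys differently), and the keys and worker counts are hypothetical.

```python
import zlib
from typing import Dict, List


def partition_for(key: str, num_workers: int) -> int:
    # crc32 returns the same value on every run, unlike Python's built-in
    # hash() for strings, which is randomized per process.
    return zlib.crc32(key.encode("utf-8")) % num_workers


def distribute(keys: List[str], num_workers: int) -> Dict[int, List[str]]:
    """Assign every key to exactly one worker based on its hash."""
    partitions: Dict[int, List[str]] = {w: [] for w in range(num_workers)}
    for key in keys:
        partitions[partition_for(key, num_workers)].append(key)
    return partitions


if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(10_000)]
    for workers in (2, 4, 8):
        busiest = max(len(p) for p in distribute(keys, workers).values())
        print(f"{workers} workers -> busiest worker holds {busiest} records")
```

As workers are added, the load on the busiest worker drops roughly in proportion, which is the essence of scaling out.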

2. Cost-Effective Infrastructure

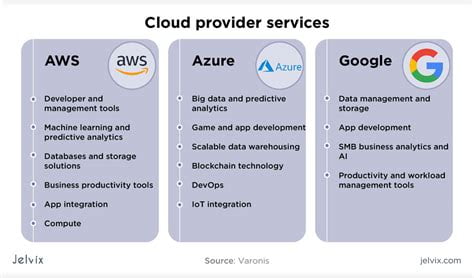

Cloud computing enables organizations to shift from capital-intensive investments in hardware to pay-as-you-go models. Leveraging cloud platforms like Amazon Web Services (AWS) or Microsoft Azure, enterprises can access extensive computing resources on demand, which can reduce overall infrastructure costs, particularly for workloads that run only part of the time.
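Whether pay-as-you-go is actually cheaper depends on how many hours the resources are used. The sketch below computes the break-even point between a fixed upfront cost and an hourly on-demand rate; every figure in it is a made-up placeholder, not an actual AWS or Azure price.

```python
def on_demand_cost(hourly_rate: float, hours_used: float) -> float:
    """Pay-as-you-go: spend grows with the hours actually used."""
    return hourly_rate * hours_used


def break_even_hours(upfront_cost: float, hourly_rate: float) -> float:
    """Hours of use at which on-demand spending matches a fixed upfront cost."""
    return upfront_cost / hourly_rate


if __name__ == "__main__":
    # Hypothetical figures for illustration only; not actual AWS or Azure prices.
    upfront = 12_000.0  # buying and running equivalent hardware for the period
    rate = 2.0          # on-demand price per instance-hour
    print(break_even_hours(upfront, rate))  # 6000.0
    print(on_demand_cost(rate, 1_000))      # 2000.0
```

With these placeholder numbers, a cluster used for fewer than 6,000 hours in the period costs less on demand, while one that runs close to continuously may not.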

3. Real-time Data Processing

With traditional batch-oriented frameworks, analyzing data as it arrives can be challenging. Spark’s in-memory computing and its streaming engine, which processes incoming data in small micro-batches, enable near-real-time data processing, allowing organizations to gain actionable insights within seconds or minutes.
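Near-real-time pipelines often summarize a stream over short, fixed time windows. Spark’s Structured Streaming supports windowed aggregations of this kind; the plain-Python sketch below only illustrates the windowing arithmetic on a small in-memory list of hypothetical events, whereas a streaming engine would apply it incrementally as each micro-batch arrives.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: float
) -> Dict[float, Counter]:
    """Count event types per fixed, non-overlapping time window.

    Each event is a (timestamp_in_seconds, event_type) pair.
    """
    windows: Dict[float, Counter] = {}
    for ts, kind in events:
        start = (ts // window_seconds) * window_seconds  # start of the window holding ts
        windows.setdefault(start, Counter())[kind] += 1
    return windows


if __name__ == "__main__":
    events = [(0.5, "click"), (1.2, "view"), (4.9, "click"), (5.0, "click"), (7.3, "view")]
    for start, counts in sorted(tumbling_window_counts(events, 5.0).items()):
        print(start, dict(counts))
    # 0.0 {'click': 2, 'view': 1}
    # 5.0 {'click': 1, 'view': 1}
```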

4. Flexibility and Compatibility

Hadoop and Spark support a wide range of data formats, making them compatible with various data sources. They provide flexibility in ingesting, processing, and analyzing structured, semi-structured, and unstructured data, enabling organizations to leverage diverse data types for decision-making.
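As a rough illustration of handling the three kinds of data, the sketch below uses only Python’s standard library to turn CSV (structured), JSON Lines (semi-structured), and free text (unstructured) into lists of records. In Spark, the corresponding entry points are `spark.read.csv`, `spark.read.json`, and `spark.read.text`; the helper functions here are hypothetical and merely stand in for those readers.

```python
import csv
import io
import json
from typing import Any, Dict, List


def from_csv(text: str) -> List[Dict[str, str]]:
    # Structured: every row has the columns named in the header.
    return list(csv.DictReader(io.StringIO(text)))


def from_json_lines(text: str) -> List[Dict[str, Any]]:
    # Semi-structured: one JSON object per line; fields may differ between records.
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def from_free_text(text: str) -> List[Dict[str, Any]]:
    # Unstructured: no schema, so derive simple features (here, a word count per line).
    return [
        {"text": line, "words": len(line.split())}
        for line in text.splitlines()
        if line.strip()
    ]


if __name__ == "__main__":
    print(from_csv("id,city\n1,Oslo\n2,Lima\n"))
    print(from_json_lines('{"id": 1}\n{"id": 2, "tags": ["sensor"]}'))
    print(from_free_text("Great service today\n\nSlow checkout"))
```

Once every source is reduced to records, the same downstream processing can be applied regardless of where the data came from.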

5. Advanced Analytics

Spark’s libraries for machine learning (MLlib), graph processing (GraphX), and SQL queries (Spark SQL) open doors to advanced analytics. Organizations can build sophisticated models, uncover patterns, forecast trends, and make data-driven predictions, thereby unlocking valuable insights.

6. Fault Tolerance

Hadoop’s distributed design provides fault tolerance by replicating each block of data across multiple nodes (three copies by default in HDFS). If a machine fails or becomes unreachable, the data can still be read from another replica and failed tasks can be rerun on other nodes, so processing can continue without data loss.
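The sketch below illustrates why replication keeps data available. It places each block on three distinct nodes, matching HDFS’s default replication factor of 3, but it uses simple round-robin placement rather than HDFS’s rack-aware policy, and the node and block names are hypothetical.

```python
from typing import Dict, List, Set


def place_replicas(
    blocks: List[str], nodes: List[str], replication: int = 3
) -> Dict[str, List[str]]:
    """Place each block on `replication` distinct nodes using round-robin.

    This is a simplification: HDFS itself uses a rack-aware placement policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block: [nodes[(i + r) % len(nodes)] for r in range(replication)]
        for i, block in enumerate(blocks)
    }


def live_replicas(placement: Dict[str, List[str]], failed: Set[str]) -> Dict[str, List[str]]:
    """List, for each block, the replicas that sit on nodes that are still up."""
    return {
        block: [n for n in replicas if n not in failed]
        for block, replicas in placement.items()
    }


if __name__ == "__main__":
    nodes = [f"node{i}" for i in range(1, 6)]
    blocks = [f"block{i}" for i in range(8)]
    placement = place_replicas(blocks, nodes)
    survivors = live_replicas(placement, failed={"node1", "node2"})
    # With 3 replicas, any 2 failed nodes still leave at least one copy of every block.
    print(all(len(copies) >= 1 for copies in survivors.values()))  # True
```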

7. Data Security and Privacy

Cloud computing platforms invest heavily in security measures and compliance certifications that help protect data privacy. Encryption, access controls, and data separation mechanisms support secure data handling within the cloud environment.

Weaknesses of Big Data and Cloud Computing with Hadoop and Spark

1. Steep Learning Curve

Implementing and managing big data frameworks like Hadoop and Spark often require specialized skills. Organizations may need to invest in training or hire experienced professionals to effectively leverage these technologies.

2. Complex Infrastructure Setup

Setting up a Hadoop or Spark cluster involves configuring and interconnecting multiple machines, which can be complex and time-consuming. Tuning for performance, keeping the cluster fault tolerant, and maintaining it over time all pose technical challenges.

3. Data Security Concerns

While cloud computing platforms offer robust security measures, deploying sensitive or confidential data on external cloud servers still raises security concerns. Organizations must carefully evaluate security policies, encryption methods, and compliance regulations to mitigate risks.

4. Data Latency

Despite Spark’s near-real-time capabilities, some use cases require millisecond-level or lower latency, which is difficult to achieve with micro-batch stream processing and with the network round-trips of cloud-based deployments. Processing data within such strict time constraints remains a hurdle in some scenarios.

5. Cost Considerations

While cloud computing can offer cost benefits, organizations must carefully manage resource allocation and usage to avoid unexpected expenses. Data storage costs, data transfer fees, and high-performance computing instances can impact the overall budget.
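A simple way to catch unexpected expenses is to estimate each cost component separately and compare the total with a budget before running a workload. The sketch below does that arithmetic; the unit prices and usage figures are made-up placeholders, not any provider’s actual rates, and the cost model ignores details such as tiered pricing and free allowances.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Usage:
    storage_gb: float     # data kept in storage during the month
    egress_gb: float      # data transferred out of the provider's network
    compute_hours: float  # instance-hours used by the cluster


@dataclass
class Rates:
    # Hypothetical unit prices; real prices vary by provider, region, and tier.
    storage_per_gb: float
    egress_per_gb: float
    compute_per_hour: float


def monthly_cost(usage: Usage, rates: Rates) -> Dict[str, float]:
    breakdown = {
        "storage": usage.storage_gb * rates.storage_per_gb,
        "transfer": usage.egress_gb * rates.egress_per_gb,
        "compute": usage.compute_hours * rates.compute_per_hour,
    }
    breakdown["total"] = sum(breakdown.values())  # summed before "total" is added
    return breakdown


def over_budget(breakdown: Dict[str, float], budget: float) -> bool:
    return breakdown["total"] > budget


if __name__ == "__main__":
    bill = monthly_cost(Usage(1_000, 200, 300), Rates(0.25, 0.5, 2.0))
    print(bill)  # {'storage': 250.0, 'transfer': 100.0, 'compute': 600.0, 'total': 950.0}
    print(over_budget(bill, budget=900))  # True
```

Seeing the breakdown, rather than only the total, makes it easier to spot which component (here, compute) is driving the bill.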

6. Compatibility Challenges

Legacy systems or applications may not seamlessly integrate with big data frameworks like Hadoop or Spark. Organizations must plan for data migration, software compatibility, and potential disruptions during the adoption of these technologies.

7. Data Governance and Compliance

With the increasing volumes of data generated and processed, ensuring data governance, meeting regulatory requirements, and maintaining data integrity become critical. Organizations must establish policies, monitor data usage, and comply with privacy regulations to protect sensitive information.

Frequently Asked Questions (FAQs)

1. What is the role of Hadoop in big data processing?

Hadoop provides a distributed file system (HDFS) and a programming paradigm called MapReduce. It allows processing and storage of massive datasets across clusters of machines, enabling scalability, fault tolerance, and efficient data processing.

2. How does Apache Spark differ from Hadoop?

Hadoop’s MapReduce focuses primarily on batch processing and writes intermediate results to disk between steps. Spark handles batch workloads too, but it also provides near-real-time analytics: its in-memory computing and data caching speed up iterative processing, streaming data analytics, and interactive queries.

3. How can organizations leverage big data and cloud computing?

Organizations can leverage big data and cloud computing to gain insights from large datasets, improve decision-making, enhance operational efficiency, support predictive analytics, and scale infrastructure cost-effectively.

4. Are there any security risks associated with using cloud computing?

While cloud computing platforms offer robust security measures, there are inherent risks associated with data privacy, access controls, and potential data breaches. Organizations must assess security protocols, encryption methods, and compliance standards to mitigate these risks.

5. What kind of skills are required to work with Hadoop and Spark?

Working with Hadoop and Spark typically requires knowledge of programming languages such as Java, Scala, or Python, as well as an understanding of distributed computing concepts, data processing algorithms, and cluster management. Familiarity with SQL and machine learning is also beneficial.

6. Can big data frameworks handle unstructured data?

Yes, big data frameworks like Hadoop and Spark can handle unstructured data. They support various data formats and provide mechanisms to process both structured and unstructured data, enabling organizations to derive insights from diverse data sources.

7. How can organizations address the challenges of data security and privacy in the cloud?

To address data security and privacy concerns in the cloud, organizations can implement encryption methods, access controls, and data separation mechanisms. Regular audits, compliance with privacy regulations, and secure data handling practices are crucial to maintaining data security.

Conclusion

As we come to the end of our exploration into the realm of big data and cloud computing with Hadoop and Spark, it is evident that these technologies hold immense potential for shaping the future of data-driven decision-making. While they offer scalability, near-real-time analytics, and cost-effective infrastructure, they also come with challenges related to implementation, security, and compatibility. It is crucial for organizations to navigate these intricacies and harness the power of big data and cloud computing responsibly.

Are you ready to embark on your own big data journey? Seize this opportunity to embrace technology, unlock insights, and make a significant impact in your domain. Stay tuned for more updates and continue your quest to stay ahead in the ever-evolving world of data and technology.

Disclaimer: The views and opinions expressed in this article are solely those of the author and do not necessarily reflect the official policy or position of any agency or organization.

Eltupe Technology And Software Updates
